Graphing the Future: Harnessing the Power of Graph Neural Networks for Cybersecurity

Introduction

The Evolving Landscape of Cybersecurity

The digital era has ushered in unparalleled technological advancements and global connectivity. As our reliance on digital infrastructure grows, so does the sophistication and frequency of cyber threats. Organizations of all sizes grapple with escalating risks of data breaches, ransomware attacks, network intrusions, and various forms of cyber espionage.

Traditional approaches to cybersecurity, such as rule-based systems and signature-based detection, have proven to be inadequate against the ever-evolving and highly dynamic nature of cyber threats. As cyber attackers employ increasingly sophisticated techniques and exploit complex network topologies, the need for innovative and adaptive cybersecurity solutions becomes imperative.

In response to this escalating challenge, the field of Artificial Intelligence (AI) has emerged as a promising ally in fortifying digital defenses. Among various AI techniques, Graph Neural Networks (GNNs) have garnered significant attention and acclaim for their ability to effectively tackle cybersecurity challenges.

The Power of Graphs in Cybersecurity

Cybersecurity is inherently a problem of graph analysis, as malicious activities in digital systems are interconnected and can form identifiable patterns. Graphs provide an intuitive and effective representation of these complex relationships, where nodes represent entities such as devices, users, or applications, and edges capture the connections and interactions between these entities.

For example, in a network intrusion scenario, each device in the network can be represented as a node, and the network connections between the devices as edges. Anomalous behavior, such as unusual traffic patterns or unauthorized access attempts, would manifest as patterns within the graph.

GNNs, built upon the foundation of graph representation learning, hold the promise to effectively analyze these interconnected relationships and uncover hidden patterns in cyber data. By leveraging the power of GNNs, cybersecurity practitioners can enhance threat detection, identify anomalies, and respond proactively to emerging cyber threats.

The Journey Ahead

In this blog post, we will explore the synergy between Graph Neural Networks and the domain of cybersecurity. We will delve into the fundamentals of GNNs, understand their working principles, and explore their various applications. Lastly, we will showcase a specific example utilizing GNNs as an intrusion detection system addressing their unique challenges in order to equip security professionals with the knowledge to harness the full potential of this cutting-edge AI modeling approach.

Graph Neural Networks

In recent years, the field of artificial intelligence has witnessed remarkable advancements leading to the development of sophisticated machine learning techniques. Among these techniques, Graph Neural Networks (GNNs) have emerged as a breakthrough in the realm of graph representation learning. GNNs have garnered significant attention for their unique ability to process and analyze graph-structured data, making them an ideal solution for tackling the complexities of interconnected systems.

Before the advent of GNNs, traditional machine learning approaches faced inherent limitations when dealing with graph data. Conventional algorithms, such as Support Vector Machines and Decision Trees, rely on fixed-dimensional feature vectors and fail to exploit the inherent relational information present in graphs. Consequently, these approaches struggle to effectively capture complex dependencies and patterns in interconnected data.

Graph data possess irregular connectivity patterns, where each node may have varying numbers of connections (neighbors), and the order of nodes and edges can vary without altering the underlying meaning. Such irregularity is difficult to handle using traditional techniques that rely on fixed grid-like structures.

How do Graph Neural Networks work?

To understand the working principles of Graph Neural Networks (GNNs), it is essential to understand the key components that empower these networks to effectively process graph-structured data. Each component plays a crucial role in capturing the relationships and patterns present in interconnected graphs. GNNs operate by taking into account the features (information) of individual nodes and the structure of their interconnections. Here's a simplified step-by-step explanation:

- Node Feature Initialization: Every node in the graph begins with some initial features. In a cyber network example, these features might be a device's IP address, operating system, and installed software.

- Message Passing: In this phase, each node sends "messages" to its neighboring nodes. The content of this message is typically based on the node's current features, which could be as simple as forwarding its current features directly.

- Aggregation: After messages are sent, each node collects or "aggregates" these messages from its neighbors. This could involve a simple operation like averaging or summing the messages, or it could be a more complex process.

- Update: Using the aggregated messages, each node updates its own features. This update is often a function of its existing features and the aggregated messages from its neighboring nodes. This is the part where the node can "learn" from its neighboring nodes.

- Readout: Lastly, after several rounds of message passing, aggregation, and updating, the GNN produces an output. This output could be a prediction for each node, for specific nodes, or for the graph as a whole, depending on the task.

This whole process enables each node to gather information from its surrounding nodes and, after several iterations, from an increasingly large portion of the graph. Thus, nodes and the entire network can capture and exploit the complex dependencies and relationships inherent in the graph structure.

Diving deeper, let's explore the core components that make GNNs effective in greater detail:

- Graph Convolution: At the core of GNNs lies the graph convolution operation, which allows nodes to exchange and update information with their neighbors. Graph convolution is an extension of the traditional convolution operation used in image processing tasks, adapted to work on irregular graph structures.

In graph convolution, each node aggregates information from its neighboring nodes via message passing, generating a new representation that encodes both its own features and the collective context of its local neighborhood. The information aggregation process is guided by learnable weights, allowing the GNN to adaptively weight the importance of different neighbors during message passing.

Through multiple layers of graph convolution, nodes can propagate information across the graph, refining their embeddings and capturing more complex patterns and dependencies. - Activation Function: After the graph convolution operation, an activation function introduces non-linearity to the node embeddings. The activation function allows GNNs to capture complex relationships and model intricate data distributions that may not be linearly separable.

Common activation functions used in GNNs include ReLU (Rectified Linear Unit), which retains positive values and sets negative values to zero, and sigmoid, which maps input values to a range between 0 and 1. These activation functions enable GNNs to learn expressive representations and enhance the model's capacity to capture complex patterns. - Pooling and Readout Operations: Pooling and readout operations are additional components in GNNs that play essential roles in processing graph data. Pooling operations reduce the size of the graph by aggregating information from groups of nodes into a single node representation. This downsampling step helps in managing computational complexity and enables the GNN to handle large-scale graphs efficiently. Readout operations, on the other hand, aggregate information from all nodes in the graph to generate a global graph-level representation. This aggregated representation captures the collective knowledge of the entire graph and is particularly useful in tasks such as graph classification, where the objective is to make predictions for the entire graph rather than individual nodes.

- Parameter Sharing and Learning: One of the key advantages of GNNs is the concept of parameter sharing. In GNNs, the same set of learnable parameters is shared across all nodes in the graph, allowing the model to generalize effectively to new nodes and graphs.

Parameter sharing enables GNNs to process graphs of varying sizes and structures without requiring additional model parameters. This design significantly reduces the memory footprint and enhances the scalability of GNNs, making them suitable for large-scale graph analysis.

The key components of Graph Neural Networks – graph convolution, activation functions, pooling, and readout operations – collectively contribute to the power of GNNs in processing and analyzing graph-structured data. Through iterative message passing and information aggregation, GNNs can capture local and global context, model complex relationships, and unveil hidden patterns within interconnected graphs.

Applications of GNNs

GNNs have several applications in cybersecurity. Here are a few examples:

- Network Intrusion Detection: In cybersecurity, one use of GNNs is for detecting anomalies or intrusions in network traffic data. Cyber networks can be viewed as large-scale graphs where each node represents a device (like a computer or a router) and each edge represents a connection between two devices. GNNs can analyze the traffic patterns on these connections to identify unusual behavior, such as a sudden increase in data being sent to an external server, which may indicate a data breach.

- Malware Detection and Analysis: Malware often involves complex behaviors and relationships among different software components or across multiple systems. By representing these interactions as a graph, GNNs can analyze and identify malicious activities more effectively. For instance, a GNN might identify a previously unseen malware variant by recognizing that its behaviors form a similar pattern to known malware in the graph.

- Phishing Attack Detection: GNNs can be used to analyze email networks to identify phishing attacks. In this context, the nodes of the graph could be individual email accounts, and the edges could represent email communication. By understanding the typical patterns of communication, a GNN could identify when an account starts sending phishing emails, which would be an anomalous behavior.

- Vulnerability Detection: Cybersecurity also involves analyzing software to identify potential vulnerabilities that could be exploited by attackers. Software can be represented as a graph where the nodes are functions or variables and the edges represent interactions or dependencies between them. GNNs can analyze these software graphs to detect complex vulnerabilities that might be missed by traditional linear analysis methods.

- User Behavior Analysis: GNNs can also analyze user behavior within a network to identify potential security risks. For instance, they can identify abnormal behavior, like a user suddenly accessing sensitive data they've never accessed before, which could indicate that the user's account has been compromised.

In each of these applications, GNNs offer an advantage over traditional methods because they can model complex relationships and dependencies between different entities in the network. This makes them a powerful tool for detecting and responding to the increasingly sophisticated threats in the world of cybersecurity.

Case Study: GNNs for Intrusion Detection

The script below utilizes a Graph Neural Network (GNN) for detecting threats in the UNSW-NB15 dataset, a benchmark dataset for Network Intrusion Detection Systems (NIDS).

The UNSW-NB 15 dataset is composed of raw network packets, which were generated using the IXIA PerfectStorm tool within the Cyber Range Lab of UNSW Canberra. The goal was to create a blend of real-world, normal activities and synthetic attack behaviors. To capture 100GB of raw traffic (for instance, Pcap files), the tcpdump tool was employed.

This dataset incorporates nine distinct attack types, including Fuzzers, Analysis, Backdoors, DoS, Exploits, Generic, Reconnaissance, Shellcode, and Worms. To create a total of 49 features, along with the class label, tools like Argus and Bro-IDS were used.

Loading and Preprocessing Data

Firstly, we need to load and preprocess the data. We are going to use the pandas library to load a CSV file containing the network data:

df = pd.read_csv("UNSW-NB15.csv")

Next, we ensure reproducibility by setting seeds and other variables:

torch.manual_seed(0) torch.cuda.manual_seed(0)

torch.cuda.manual_seed_all(0)

torch.backends.cudnn.deterministic = True

The dataset contains both numerical and categorical features. For numerical features, we standardize them by using Scikit-learn's StandardScaler. For categorical features, we apply one-hot encoding:

df[numerical_cols] = df[numerical_cols].apply(pd.to_numeric, errors='coerce')

df[categorical_cols] = df[categorical_cols].astype(str)

df = pd.get_dummies(df, columns=categorical_cols) scaler = StandardScaler()

df[numerical_cols] = scaler.fit_transform(df[numerical_cols])

The IP addresses are converted to binary representation, because it provides more valuable information than the standard string format of IP addresses:

df = ip_to_binary(df, 'srcip', 'ipsrc') df = ip_to_binary(df, 'dstip', 'ipdst')

Then, we encode the 'attack_cat' (type of attack) column using Scikit-learn's LabelEncoder:

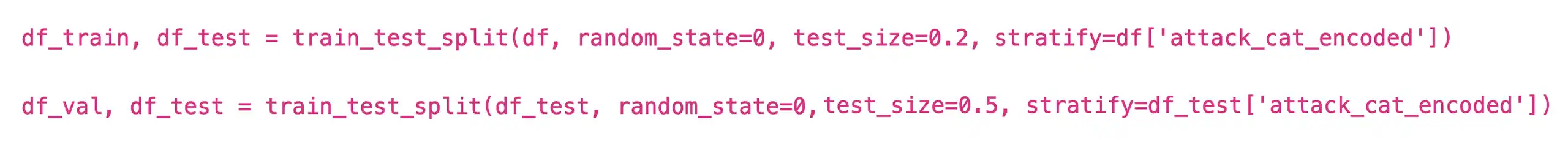

We also create train, validation, and test splits for the model training and evaluation:

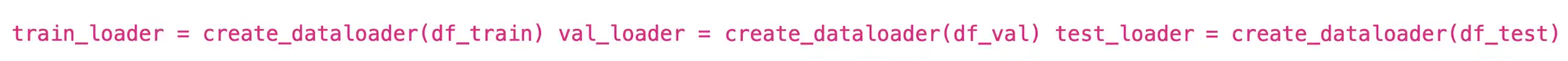

Creating Data Loaders

In order to feed the data into the GNN, we need to convert the DataFrame into a DataLoader that can batch the graph data:

The create_dataloader function prepares batches of subgraphs and constructs the edge connections between the hosts and flows in the network.

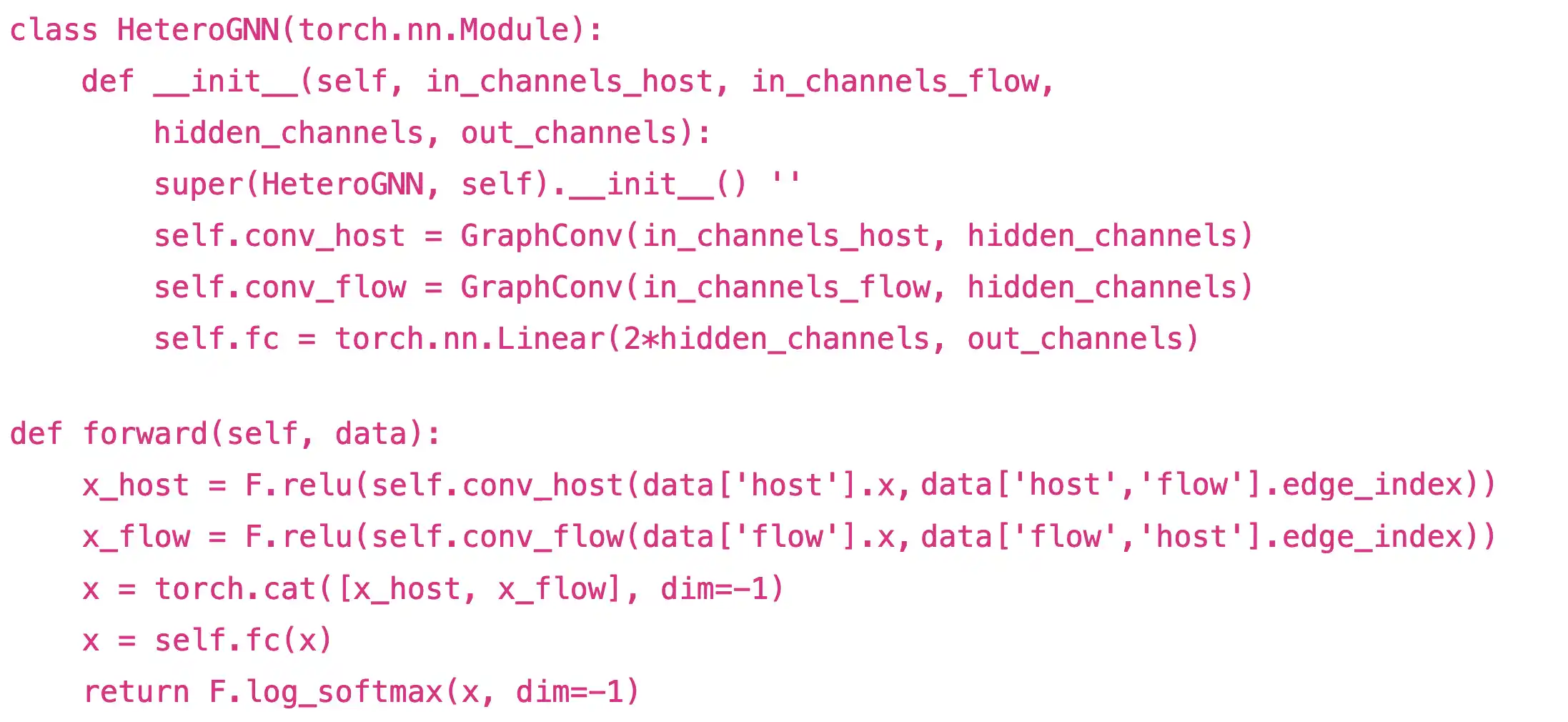

Implementing the GNN Model

We define a HeteroGNN class which is a PyTorch model class:

Here, we define two GraphConv layers – one for host data and one for flow data. The results are concatenated and passed through a fully connected (linear) layer.

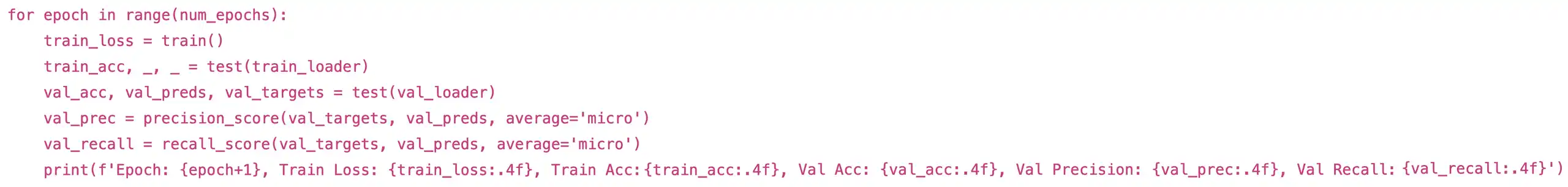

Training and Evaluation

The model is trained using the Adam optimizer and learning rate scheduler, with cross entropy as the loss function. In each epoch, the model is trained on the training set and then evaluated on the validation set:

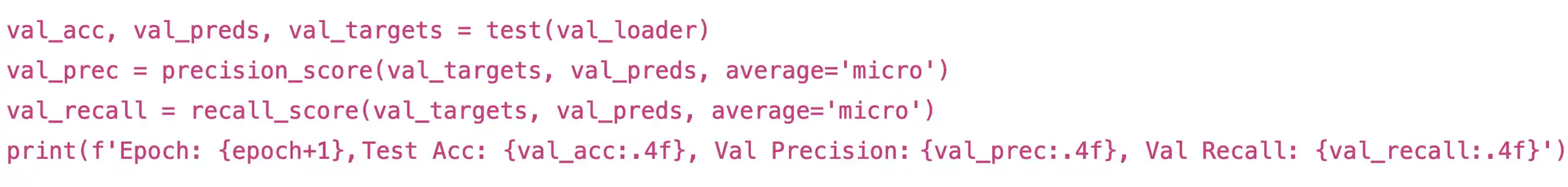

Finally, we evaluate the model on the test set:

We are using metrics accuracy, precision, and recall to evaluate the performance of the model. After training 100 Epochs:

Epoch: 1, Train Loss: 0.2058, Train Acc: 0.9697, Acc: 0.9695, Precision: 0.9695, Recall: 0.9695

Epoch: 2, Train Loss: 0.0801, Train Acc: 0.9722, Acc: 0.9717, Precision: 0.9717, Recall: 0.9717

Epoch: 3, Train Loss: 0.0718, Train Acc: 0.9731, Acc: 0.9725, Precision: 0.9725, Recall: 0.9725

Epoch: 4, Train Loss: 0.0689, Train Acc: 0.9737, Acc: 0.9732, Precision: 0.9732, Recall: 0.9732

Epoch: 5, Train Loss: 0.0673, Train Acc: 0.9742, Acc: 0.9737, Precision: 0.9737, Recall: 0.9737

…

…

…

Epoch: 99, Train Loss: 0.0541, Train Acc: 0.9790, Val Acc: 0.9783, Val Precision: 0.9783, Val Recall: 0.9783

Epoch: 100, Train Loss: 0.0541, Train Acc: 0.9791, Val Acc: 0.9783, Val Precision: 0.9783, Val Recall: 0.9783

We achieve the following total accuracy, precision and recall respectively on our test set:

Test Acc: 0.9783, Val Precision: 0.9783, Val Recall: 0.9783

In conclusion, the use of Graph Neural Networks (GNNs) for network intrusion detection holds significant promise for enhancing the performance of Network Intrusion Detection Systems. The flexibility of GNNs in handling complex data structures such as network data, coupled with their ability to effectively capture intricate relationships and dependencies among various nodes, offers unique advantages in the context of network security.

About AIZEN

The mission of CyberPoint's AIZEN team is to bring Artificial Intelligence solutions to our client's most challenging problems. AI is a critical 21st century technology and our dedicated team of Data Scientists and Machine Learning Engineers bring the knowledge and expertise to help your enterprise realize its fullest potential. We specialize in taking business challenges and translating them into material AI solutions.

Contact AIZEN

Contact us with queries about how we can support your mission today.